Elasticsearch Greatest Hits or Lessons learnt doing full-text search

This blog post concentrates on what deserves some thought when optimizing indexing, mapping and querying. It assumes a basic understanding of what Elasticsearch does; for a refresher, check the official guide.

Before we start off, three useful general rules:


Define ‘good search’

When implementing a search, make sure you know the desired result. Looking at online shops we can easily see that search always involves some kind of marketing, and the same is true for other applications. So if you are not the project owner, keep in close touch with management and find out what results they expect. This way you find out early on if expectations contradict each other.


Get your hands on actual data

To get meaningful results, it is crucial to have access to actual data. Try to obtain it, because there will be surprises you otherwise won’t find out about.


Don't try to be perfect

Elasticsearch lets you start out relatively easily. But it can also lead you down the rabbit hole if you are trying to get perfect results. Keep in mind that scores are entirely relative. Every data set is different, and so are user expectations. Don't get lost: deploy at some point and maybe keep stats on what people search for, where they get zero results and where they get too many. With this newfound knowledge you can come back at any time and optimize.

Back to the technicalities:

Problem

Speakerinnen Liste (GitHub)[1], a platform where conference organizers can find women experts, wanted to improve its search by introducing Elasticsearch. The goal was to create one single search field where anything could be entered and one or more speakers would be found. In our particular case this might be a name, a field of expertise, a Twitter handle, a place or a language. Or maybe even something from the speaker's biography. Or any combination of these.

What's tricky here is that we don’t know what the user will enter, so we will have to run the input on all fields. We also don’t know the language of the entries (currently mostly English and German).

For a nicer user experience we decided to offer a faceted search: the search results can be filtered by cities and languages.

The two main things we need to get right:

- Mapping the fields of the speaker profiles, as this forms the basis for what can be searched for.

- Building a score-based query that weighs the importance of fields. This should be done in a way that, no matter what users enter, the query returns the hits we expect in the order we wish to see.

All of this requires understanding the data at hand as well as predicting user behavior.

The maintainers had decided to use the elasticsearch-rails gem for easier integration with ActiveRecord, and we took it from there. Since, at the time we started out, the gem only supported Elasticsearch up to version 2.4, this is the version this post is about.

Indexing / Mapping / Analyzers

Starting out by playing around with a very basic setup is not a bad idea. After a while you want to think about the following:


Index everything?

When looking at data identify what you want to be able to search for. You probably have more in your database than you want to make searchable. Do not index those fields! In our case there is private data like email addresses which we don't want to add to the public search.


Analyze everything?

Find out which fields you don’t want to analyze. These fields are still searchable, but their value is indexed exactly as specified. This is useful for filtering when you don’t need scoring. For example, we need to return cities in their original spelling for the faceted search, so the field cities.unmodified is not_analyzed.

Disable norms?

Are there any fields whose length can vary a lot? This can lead to unwelcome results. In our case, the field topic_list contains the topics the speakers are available to talk about. Some speakers might enter 20 topics, others only two or even just one. If field length is taken into account, shorter fields are weighted higher, so a hit on the same topic gets a much higher score for speakers with a short list than for speakers with a long one. Disabling field-length norms solves this while still allowing the field to be analyzed and scored.
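The three decisions above could look roughly like this in an Elasticsearch 2.x mapping, written as a plain Ruby hash. This is a sketch under assumptions: the constants INDEXED_FIELDS and SPEAKER_MAPPING and the helper indexed_document are hypothetical names, and in an elasticsearch-rails model the field selection would typically go into an as_indexed_json override.

```ruby
# Hypothetical whitelist: only these attributes are sent to Elasticsearch.
# Private data such as email is deliberately absent.
INDEXED_FIELDS = %w[fullname twitter cities country topic_list].freeze

# Sketch of an Elasticsearch 2.x mapping (pre-5.0 string syntax).
SPEAKER_MAPPING = {
  properties: {
    cities: {
      type: 'string',
      fields: {
        # analyzed sub-field, used for scoring
        standard:   { type: 'string', analyzer: 'standard' },
        # original spelling, used for the city facet
        unmodified: { type: 'string', index: 'not_analyzed' }
      }
    },
    topic_list: {
      type: 'string',
      analyzer: 'standard',
      # ignore field length so long topic lists are not penalized
      norms: { enabled: false }
    }
  }
}.freeze

# Keep only whitelisted attributes (what as_indexed_json might return).
def indexed_document(attributes)
  attributes.select { |key, _| INDEXED_FIELDS.include?(key) }
end

profile = { 'fullname' => 'Anne Mustermann', 'email' => 'anne@example.com', 'cities' => 'Berlin' }
p indexed_document(profile)
```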

Get The Right Analyzers

To get a feeling for your data set, run the standard analyzer on everything and go from there. You’ll probably already see what is fine and what needs some tweaking.

There are several ready-made analyzers available, or you can build custom ones yourself. In the following I’ll mention only a few as examples.

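Let’s have a closer look at Speakerinnen Liste: the fields country and topic_list are probably fine with the standard analyzer. (It splits text into words and lowercases them. It can also filter out stop words like “a”, “an”, “and”, “are”…, but only if you configure a stopwords list, since by default that list is empty.)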

Pattern Matching

But what about Twitter handles? In this field we don’t know whether users will add the @ or not. Besides lowercasing everything, we want to get rid not only of the @ but of any other special characters as well. (Even though Twitter allows underscores, users might forget them or misspell them. This way we are on the safe side: in the worst case we get several matches, which is better than getting none.)

We achieve this by building our own analyzer:

```ruby
analysis: {
  char_filter: {
    strip_twitter: {
      type: 'pattern_replace',
      pattern: '[^A-Za-z0-9]',  # anything that is not a letter or digit
      replacement: ''
    }
  },
  analyzer: {
    twitter_analyzer: {
      type: 'custom',
      char_filter: ['strip_twitter'],
      tokenizer: 'keyword',
      filter: ['lowercase']
    }
  }
}
```

First, our custom char_filter uses a regex to find anything that is not a letter or digit and strips it (by replacing it with an empty string).

The analyzer then uses the keyword tokenizer which turns any given text into one single term. Finally the lowercase filter lowercases everything.

Watch out: char_filters are applied before tokenization, and therefore before the token filters. That is why the regex has to include uppercase letters explicitly (the A-Z in [^A-Za-z0-9]); otherwise they would be stripped as well, since lowercasing only happens as the last step.
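To see why the order matters, here is a plain-Ruby imitation of the three steps of twitter_analyzer. It is only an approximation for illustration (Elasticsearch runs the pattern with Java regexes), and the method name twitter_analyze is hypothetical. The second call uses a lowercase-only character class and shows the uppercase letters being stripped before they could be lowercased.

```ruby
# Pure-Ruby approximation of twitter_analyzer, for illustration only:
# char_filter -> keyword tokenizer -> lowercase filter, in that order.
def twitter_analyze(input, pattern: /[^A-Za-z0-9]/)
  filtered = input.gsub(pattern, '') # char_filter runs first, on the raw text
  tokens   = [filtered]              # keyword tokenizer: the whole input is one token
  tokens.map(&:downcase)             # lowercase token filter runs last
end

p twitter_analyze('@dID/*&^I_t!#<$wOrk?123')                        # => ["diditwork123"]
p twitter_analyze('@dID/*&^I_t!#<$wOrk?123', pattern: /[^a-z0-9]/)  # => ["dtwrk123"]
```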

Check your analyzers

To find out whether the mapping works as intended, consult one of the available plugins.

I used kopf, which apparently isn’t maintained any more but worked just fine for me. Hidden under “more” you’ll find the “analysis” option.

An alternative is cerebro, a more recent tool by the same author. (An X-Men fan, I take it?)

These are great tools to have a look at all your analyzed fields.

Simply try out what the analyzers produce!

@dID/*&^I_t!#<$wOrk?123 should become diditwork123.

Great, it did work!
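Without a plugin, the same check can be done with Elasticsearch's _analyze API. The sketch below only uses Ruby's standard library to build the request; the index name speakers and the address localhost:9200 are assumptions, and the actual call is commented out so nothing is sent.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Assumed cluster address and index name.
uri = URI('http://localhost:9200/speakers/_analyze')

# Ask the index to run its twitter_analyzer on a sample string.
request = Net::HTTP::Post.new(uri)
request['Content-Type'] = 'application/json'
request.body = JSON.generate({
  analyzer: 'twitter_analyzer',
  text: '@dID/*&^I_t!#<$wOrk?123'
})

# Uncomment to send it to a running cluster; the response lists the tokens.
# response = Net::HTTP.start(uri.host, uri.port) { |http| http.request(request) }
# p JSON.parse(response.body)['tokens'].map { |t| t['token'] }
```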

Synonyms

When dealing with full-text search you might want to use synonyms. Choosing synonyms requires anticipating what your users will look for and knowing your data sets. In our case people might explicitly look for speakers with a ‘phd’. Since the application is used by many German speakers, they probably also look for a ‘Dr.’. If you only have a few synonyms, they can be added directly in the mapping like so:

```ruby
analysis: {
  filter: {
    synonym_filter: {
      type: 'synonym',
      synonyms: [
        'phd,dr.,dr'
      ]
    }
  },
  analyzer: {
    fullname_analyzer: {
      type: 'custom',
      tokenizer: 'standard',
      filter: [
        'asciifolding',
        'lowercase',
        'synonym_filter'
      ]
    }
  }
}
```

This works, but it isn’t very clean and certainly isn’t the way to go for a large number of synonyms. In that case, put them in a separate file.
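A sketch of the file-based variant, assuming Elasticsearch 2.x: the synonym filter's synonyms_path points to a file (here the assumed path analysis/synonyms.txt, resolved relative to the Elasticsearch config directory and needed on every node). The file uses the Solr synonym format with one group of equivalent terms per line. The parser synonym_groups is a hypothetical helper that only illustrates the format; it is not how Elasticsearch reads the file.

```ruby
# Same filter as above, but reading its rules from a file.
# 'analysis/synonyms.txt' is an assumed path relative to the config directory.
SYNONYM_FILTER = {
  synonym_filter: {
    type: 'synonym',
    synonyms_path: 'analysis/synonyms.txt'
  }
}.freeze

# Hypothetical file content in Solr format: comma-separated equivalents,
# one group per line, '#' starts a comment.
SYNONYMS_TXT = <<~TXT
  # academic titles
  phd,dr.,dr
TXT

# Tiny illustrative parser for the format above.
def synonym_groups(text)
  text.lines
      .map(&:strip)
      .reject { |line| line.empty? || line.start_with?('#') }
      .map { |line| line.split(',').map(&:strip) }
end

p synonym_groups(SYNONYMS_TXT) # => [["phd", "dr.", "dr"]]
```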

Languages

Does your data hold information in specific languages? Are special characters in use? Do you have to deal with full text? Consider using a language analyzer. It handles the language’s special characters and comes with a list of stop words you don’t want to score (like “a”, “and”, “the” etc.) and a stemmer that reduces words to their root form.

Are several languages in use in the same field? Make a field for each language and analyze it with the matching language analyzer. Speakerinnen Liste, for example, has separate fields for English and German biographies. At query time, simply search both fields.
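As a sketch (Elasticsearch 2.x syntax, plain Ruby hashes), the two biography fields could each get a built-in language analyzer and then be queried together. The constant BIO_MAPPING and the helper bio_query are hypothetical; the field names bio_en and bio_de are the ones used in the final query of this post.

```ruby
# One field per language, each with the matching built-in language analyzer.
BIO_MAPPING = {
  properties: {
    bio_en: { type: 'string', analyzer: 'english' },
    bio_de: { type: 'string', analyzer: 'german' }
  }
}.freeze

# Run the same user input against both language fields.
def bio_query(text)
  {
    multi_match: {
      query: text,
      fields: BIO_MAPPING[:properties].keys.map(&:to_s)
    }
  }
end

p bio_query('Datenschutz')
```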

Query

Queries can obviously vary a lot depending on what you aim to achieve. Here are some things which helped me:


Don't use term queries

When building a query, better not use term queries unless you really know what you are doing. Match queries and their relatives conveniently analyze the search input with the same analyzer that was used to index the field. Term queries do not: they look up the input exactly as entered, which usually leads to unexpected results.
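A toy model of the difference, in plain Ruby rather than Elasticsearch: the index holds analyzed (here simply lowercased) terms, a term query looks up the raw input, and a match query analyzes the input first. The analyze helper is a rough, hypothetical stand-in for the standard analyzer, not its real implementation.

```ruby
# Rough stand-in for an analyzer: split into words and lowercase them.
def analyze(text)
  text.downcase.scan(/[[:alnum:]]+/)
end

# What ends up in the index for a field containing "Berlin Hamburg".
INDEXED_TERMS = analyze('Berlin Hamburg') # => ["berlin", "hamburg"]

# term query: the input is looked up verbatim, without analysis.
def term_query_hit?(input)
  INDEXED_TERMS.include?(input)
end

# match query: the input goes through the same analysis first.
def match_query_hit?(input)
  analyze(input).any? { |term| INDEXED_TERMS.include?(term) }
end

p term_query_hit?('Berlin')  # => false
p match_query_hit?('Berlin') # => true
```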


multi_match

For Speakerinnen Liste we went for the multi_match query, which runs your query on multiple fields. This makes sense since we don't know the user input and have to check all our fields anyway.

Types

multi_match allows for several types, the default being best_fields. This is a good choice when searching for several words found in the same field, which is most likely not the case for our application. We simply don't know what users will enter: it might be just one word, multiple words from one field (e.g. a full name like “Anne Mustermann”) or multiple words from several fields (e.g. a topic and a first name like “Ruby Anne”).

cross_fields does the trick: it looks for each term in all fields (rather than for the whole term combination in a single field). It also evens out the scoring somewhat, as it treats all fields with the same analyzer as one big field.

If you want to fiddle around further, check out tie_breaker (for per-term blended queries only). With it you can influence how the scores of the matching fields are combined.
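The multi_match documentation describes tie_breaker like this: 0.0 takes only the single best score, 1.0 adds all scores up, and anything in between takes the best score plus tie_breaker times each score from the other matching fields. A minimal sketch of that arithmetic, with made-up scores and a hypothetical combine_scores helper:

```ruby
# Best score plus tie_breaker times the scores of the other matching fields.
def combine_scores(field_scores, tie_breaker)
  best = field_scores.max
  others = field_scores.sum - best
  best + tie_breaker * others
end

scores = [1.0, 0.5, 0.2] # made-up per-field scores for one term
p combine_scores(scores, 0.0) # => 1.0 (only the best field counts)
p combine_scores(scores, 1.0) # => 1.7 (all fields added up)
p combine_scores(scores, 0.3) # roughly 1.21
```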

A Little Excursion

Don’t use fuzzy querying!

People can’t spell. This is something to take into account. But how?

My first try was to use fuzziness in the query but I soon found out that this is not advisable in full-text search.

In our case, a standard fuzzy query returned way too many hits. What's more: hits that didn’t seem to make sense!

For example when searching for the first name “Maren” I also got hits for speakers mentioning “Care Work” and “AR / Augmented Reality”. What was happening?

I used language analyzers combined with a fuzzy query. Contrary to what I expected, it wasn’t the words as a whole that got compared, but the output of the German analyzer, which includes a tokenizer and a stemmer.

This way “Maren” became “mar”, which then got compared to “car” and “ar”. These are so similar that they produced hits once fuzziness: “AUTO” was added.

(See also Levenshtein distance: the number of single-character edits needed to change one word into another. In the examples above it is always one.)
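The arithmetic behind the “Maren” surprise can be reproduced in plain Ruby: a textbook Levenshtein distance plus the AUTO rule from the Elasticsearch docs (terms of length 0 to 2 must match exactly, 3 to 5 allow one edit, longer terms allow two). Both helpers are illustrative sketches with hypothetical names, not Elasticsearch code.

```ruby
# Classic dynamic-programming Levenshtein distance, row by row.
def levenshtein(a, b)
  prev = (0..b.length).to_a
  a.each_char.with_index(1) do |ca, i|
    curr = [i]
    b.each_char.with_index(1) do |cb, j|
      cost = ca == cb ? 0 : 1
      # deletion, insertion, substitution
      curr << [prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost].min
    end
    prev = curr
  end
  prev.last
end

# Edit distance allowed by fuzziness: "AUTO", based on the term length.
def auto_fuzziness(term)
  case term.length
  when 0..2 then 0
  when 3..5 then 1
  else 2
  end
end

stemmed = 'mar' # what the German analyzer made of "Maren", per the post
%w[car ar].each do |candidate|
  distance = levenshtein(stemmed, candidate)
  puts "#{stemmed} -> #{candidate}: distance #{distance}, hit: #{distance <= auto_fuzziness(stemmed)}"
end
```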

Take away:

- When using language analyzers, think hard about whether you want the included stemmers. If you don’t need them at all, you can reimplement any language analyzer and just throw out the stemmer.

- Again: do not use fuzzy queries!
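The Elasticsearch docs show how each language analyzer can be rebuilt as a custom analyzer. Here is a sketch of the German one with the stemmer removed, assuming Elasticsearch 2.x and the filter names from that reimplementation. The name german_no_stem is hypothetical, and the keyword_marker filter is left out as well, because it only exists to protect words from the stemmer.

```ruby
# German analyzer rebuilt as a custom analyzer, minus the stemmer.
GERMAN_NO_STEM = {
  filter: {
    german_stop: { type: 'stop', stopwords: '_german_' }
  },
  analyzer: {
    german_no_stem: { # hypothetical name
      type: 'custom',
      tokenizer: 'standard',
      # built-in order would be: lowercase, german_stop, german_keywords,
      # german_normalization, german_stemmer
      filter: %w[lowercase german_stop german_normalization]
    }
  }
}.freeze
```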

Use suggesters instead

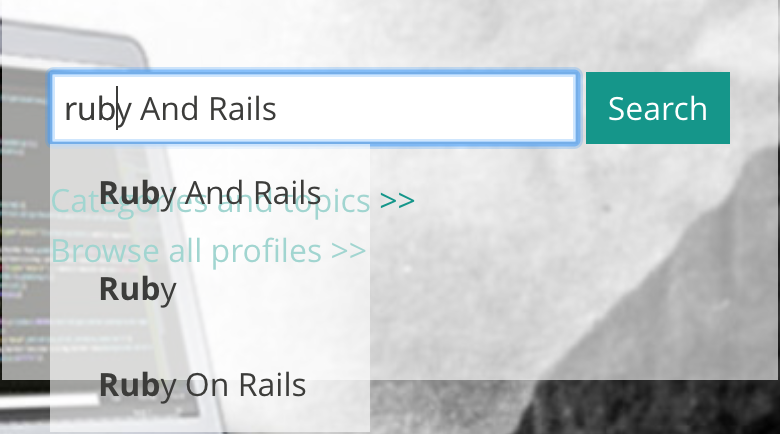

Spelling remains hard. So how can we provide the users with results they expect when they can’t provide the necessary correct input? By making them type less. Using completion suggesters is a great way to prevent typos and possible subsequent zero matches.

Since I had a Rails application at hand, I used Twitter’s typeahead.js, a jQuery plugin, together with Bloodhound, its suggestion engine. This was not too difficult, especially since I followed Jason Kadrmas’ tutorial.

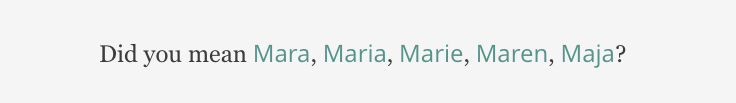
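On the Elasticsearch side, a completion suggester needs a dedicated completion field. A minimal sketch for 2.x as Ruby hashes; name_suggest, speaker_names and both helpers are hypothetical. Since completion is prefix-based, the sketch also adds the remaining name parts as separate inputs, so typing “Mus” can find “Anne Mustermann”.

```ruby
# Completion field in the mapping (Elasticsearch 2.x).
COMPLETION_MAPPING = {
  properties: {
    name_suggest: { type: 'completion', analyzer: 'simple' }
  }
}.freeze

# Value a document could send for that field: every prefix entry point.
def completion_input(fullname)
  { input: [fullname, *fullname.split(' ').drop(1)] }
end

# Request body for the _suggest endpoint, sent as the user types.
def completion_request(prefix)
  { speaker_names: { text: prefix, completion: { field: 'name_suggest' } } }
end

p completion_input('Anne Mustermann')
p completion_request('Mus')
```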

Another good idea is to implement did-you-mean suggesters, softening the frustration of zero matches.

It can look like this:

```ruby
query: {….},
suggest: {
  did_you_mean_fullname: {
    text: query,   # the raw user input
    term: {
      field: 'fullname'
    }
  }
}
```
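The term suggester answers with one entry per token of the input, each with a list of options. Here is a sketch of turning that into a “Did you mean ...?” string. The helper did_you_mean is hypothetical, and the sample response is hand-written to mimic the documented response shape.

```ruby
# Take the best option per token, or keep the token if there is none.
# Returns nil when nothing needs correcting.
def did_you_mean(response, name = 'did_you_mean_fullname')
  entries = response.fetch('suggest', {}).fetch(name, [])
  return nil if entries.all? { |entry| entry['options'].empty? }

  entries.map { |entry| entry['options'].first&.fetch('text') || entry['text'] }.join(' ')
end

# Hand-written sample, shaped like a term suggester response.
sample = {
  'suggest' => {
    'did_you_mean_fullname' => [
      { 'text' => 'anne', 'offset' => 0, 'length' => 4, 'options' => [] },
      { 'text' => 'musterman', 'offset' => 5, 'length' => 9,
        'options' => [{ 'text' => 'mustermann', 'score' => 0.89, 'freq' => 1 }] }
    ]
  }
}

puts did_you_mean(sample) # prints "anne mustermann"
```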

Tweaking The Results

Following are some things that have proven useful to me.

Use explain

You can have a detailed look at what is going on under the hood by using kopf’s rest feature. It lets you send a request as a POST query and returns the hits including their scores. When adding "explain": true to the request body, next to the query, you'll also get a detailed breakdown of how the score came about for every hit. Although this is not readily understandable, by skimming it you'll see which fields and which terms contributed to the score. This is especially useful when getting completely unexpected hits.
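To skim an explanation outside of kopf, a small recursive helper can flatten the _explanation tree (each node has a value, a description and optional details) into an indented outline. The helper names, the example query and the sample tree are made up for illustration; real descriptions are considerably longer.

```ruby
# Request body with explain enabled; the match query stands in for your own.
def explained_search(query_text)
  { explain: true, query: { match: { fullname: query_text } } }
end

# Flatten an _explanation node and its details into indented lines.
def explanation_lines(node, depth = 0)
  line = format('%s%.4f %s', '  ' * depth, node['value'], node['description'])
  children = node['details'] || []
  [line] + children.flat_map { |child| explanation_lines(child, depth + 1) }
end

# Made-up sample, shaped like the _explanation of one hit.
sample = {
  'value' => 0.42, 'description' => 'sum of:',
  'details' => [
    { 'value' => 0.3, 'description' => 'weight(fullname:anne in 0)', 'details' => [] },
    { 'value' => 0.12, 'description' => 'weight(topic_list:ruby in 0)' }
  ]
}

puts explanation_lines(sample)
```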

Boosting

Boosting is usually the first thing that comes to mind. When querying several fields, you can give each field a boost above 1 to increase its weight or below 1 to decrease it, depending on its importance.

When using multi_match just add the ^ and the intended boost to the field name:

```ruby
fields: [
  'fullname^1.7',
  'twitter',
  'topic_list^1.4',
  # ...
]
```

Play around a little. With Speakerinnen Liste we don't know which fields the query will hit, so we don't want the scoring range to spread out too much, which is good advice in general. Keep your main use case and the other decisions you made in mind: we gave the name field and the main topic the highest boosts, since these were identified as the most likely search targets. Speakerinnen Liste has a faceted search which allows filtering by countries, cities and languages, so these fields don't need much of a boost. And then there is the option of a boost below 1. We used it on the biographies, a full-text field with somewhat unpredictable user input (highly varying length, repeated terms etc.). We still wanted them to be included in the search, but without skewing the results too much.
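If boosts are tuned often, it can help to keep them in one place. Here is a hypothetical helper that renders a hash of boosts (values taken from this post) into multi_match's field^boost notation, leaving out the default boost of 1.0:

```ruby
# Boosts in one place; 1.0 means "no boost".
FIELD_BOOSTS = {
  'fullname' => 1.7,
  'twitter' => 1.0,
  'topic_list' => 1.4,
  'bio_en' => 0.8
}.freeze

# Render boosts as multi_match field strings, e.g. "fullname^1.7".
def boosted_fields(boosts)
  boosts.map { |field, boost| boost == 1.0 ? field : "#{field}^#{boost}" }
end

p boosted_fields(FIELD_BOOSTS)
# => ["fullname^1.7", "twitter", "topic_list^1.4", "bio_en^0.8"]
```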

minimum_should_match

Scoring is one of the great things about Elasticsearch. However, sometimes it is difficult to get just the right amount of hits. minimum_should_match can be part of the solution. It lets you control the number of terms (absolute or relative) a document needs to match to produce a hit.
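With a percentage, the number of required terms depends on how many terms the input has, and per the Elasticsearch docs the computed number is rounded down. A sketch of that calculation for positive percentages only (required_terms is a hypothetical helper), using the 76% from the query in the next section:

```ruby
# Positive percentages only; integer division rounds down,
# e.g. 3 * 76 / 100 = 2.
def required_terms(percentage, term_count)
  term_count * percentage.chomp('%').to_i / 100
end

(2..5).each do |n|
  puts "#{n} terms with '76%': at least #{required_terms('76%', n)} must match"
end
```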

Split queries

Somehow things just won't go right? Maybe you are trying too much in one place. Think about splitting your query. This way fields can be treated differently, e.g. a different type can be used.

Realizing that the biographies were messing with our results, we didn't include them in the main cross_fields query but separated them:

```ruby
{
  min_score: 0.14,
  query: {
    bool: {
      should: [
        {
          multi_match: {
            query: query,
            # term-centric approach. First analyzes the query string into individual terms,
            # then looks for each term in any of the fields, as though they were one big field.
            type: 'cross_fields',
            fields: [
              'fullname^1.7',
              'twitter',
              'split_languages',
              'cities.standard^1.3',
              'country',
              'topic_list^1.4',
              'main_topic_en^1.6',
              'main_topic_de^1.6'
            ],
            tie_breaker: 0.3,
            minimum_should_match: '76%'
          }
        },
        {
          multi_match: {
            query: query,
            # field-centric approach: each field is scored on its own, the best field wins
            # and tie_breaker adds a share of the other matching fields' scores.
            type: 'best_fields',
            fields: [
              'bio_en^0.8',
              'bio_de^0.8'
            ],
            tie_breaker: 0.3
          }
        }
      ]
    }
  }
}
```

The End

So this is basically it! See the whole thing (as of the time this post was published). Phew! That was a long text. Thanks for staying!

P.S.

Are you female identifying? Not yet on Speakerinnen Liste? I bet you are an expert on something! Register now!

Are you a conference organizer? Get more women* onto the panels and use Speakerinnen Liste. (There are also online resources to help you achieve this; for example, check out this organizer's experiences.) You can do it!

Everyone: Encourage the women* in your life to hit the stage!

[1] The driving forces behind this project are Maren Heltsche and Tyranja. Absolutely watch out for them! Speakerinnen Liste is the result of the very first Rails Girls Berlin learners group, the Rubymonsters (yay!), and is therefore a Rails application.